Web Scraping Tutorial: A Guide for Beginners

Picture this: instead of tediously sifting through web pages for information, you extract the data automatically. That’s the magic of web scraping! If you are just starting to explore web scraping, you may have heard about its potential to mine valuable data from websites.

In this digital age, web scraping has become a game-changer, enabling us to collect massive amounts of unstructured data for various purposes, be it data analysis, research, or business insights. In this Web Scraping Tutorial, I’ll be taking you through the basics of web scraping step by step, making it easy for beginners to grasp.

What is Web Scraping?

Web scraping, to put it simply, is the process of automatically extracting information from websites.

It involves parsing HTML or XML documents to retrieve specific information and allows developers to programmatically navigate websites, extract desired data, and facilitate data analysis and research.

Is Web Scraping Legal and Ethical?

Before we dive in, it’s essential to understand the legal and ethical aspects of web scraping. Web scraping is not illegal in itself, but how you use it must comply with the website’s terms of service (ToS) and the relevant laws.

Key Takeaways:

- Always check a website’s “robots.txt” file to see if it allows scraping.

- Avoid overwhelming a website’s server with too many requests, as this could be considered unethical and may result in IP bans.
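One simple way to keep the request volume low is to pause between requests. The sketch below is only an illustration of that idea, not a complete scraper: the function name `fetch_politely`, the one-second delay, and the stand-in `fetch` callable are all assumptions made for this example.

```python
import time


def fetch_politely(urls, fetch, delay_seconds=1.0, sleep=time.sleep):
    """Call fetch(url) for each URL, pausing between consecutive requests.

    `fetch` is any callable that downloads one page. It is passed in so the
    pacing logic stays independent of the HTTP library you choose.
    """
    pages = {}
    for index, url in enumerate(urls):
        if index > 0:
            sleep(delay_seconds)  # wait before every request except the first
        pages[url] = fetch(url)
    return pages


# Demonstration with a stand-in fetcher, so no real website is contacted.
recorded_pauses = []
demo_pages = fetch_politely(
    ["https://example.com/a", "https://example.com/b", "https://example.com/c"],
    fetch=lambda url: f"<html>{url}</html>",
    delay_seconds=1.0,
    sleep=recorded_pauses.append,  # record the pauses instead of really sleeping
)
print(len(demo_pages), "pages fetched with", len(recorded_pauses), "pauses")
```

In a real scraper you would pass a function that performs the actual download and leave `sleep` at its default. A suitable delay depends on the website, so treat one second as a starting point rather than a rule.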

How Does Web Scraping Actually Work?

Web scraping begins with mining URLs, which are the addresses of specific webpages, to initiate the data extraction process. Here’s a technical overview of how web scraping works:

1. URL Mining:

The first step is to identify the target website and gather the URLs of the webpages containing the desired data. URLs serve as gateways to access the information stored on those web pages.

2. HTTP Request:

Once we have the URLs, the web scraper sends an HTTP request to the web server hosting the website. The scraper acts as a web client, much like a browser, and requests the content of each webpage.

3. HTML Parsing:

The server responds with the webpage’s HTML code, which contains the data we seek. Web scraping libraries like Beautiful Soup or Scrapy then parse this HTML into a structured, tree-like representation, similar to the Document Object Model (DOM) that a browser builds.

4. Element Identification:

The DOM allows us to navigate through the webpage’s structure and locate specific HTML elements (such as headings, tables, or paragraphs) that contain the data we want to extract.

5. Data Extraction:

Once we’ve identified the target elements, we extract the relevant data from the HTML tags. This data can be in the form of text, images, or even links to other webpages.

6. Data Processing:

The extracted data may require further processing, such as cleaning and formatting, to make it suitable for analysis and storage.

7. Storage or Analysis:

Finally, the scraped data can be stored in a preferred format, like a CSV file or a database, for future use. Alternatively, it can be directly used for data analysis, research, or other applications.
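To make the parsing, element identification, extraction, and processing steps more concrete, here is a small sketch that collects links from a page, which is also one way to gather further URLs to scrape. To stay dependency-free it uses Python’s built-in `html.parser` module instead of Beautiful Soup, and the sample HTML, the `LinkCollector` class name, and the `example.com` base URL are all made up for this illustration.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkCollector(HTMLParser):
    """Collect the href of every <a> element, resolved against a base URL."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":  # element identification: only anchor tags matter here
            return
        href = dict(attrs).get("href")  # data extraction: read the attribute
        if href:
            absolute = urljoin(self.base_url, href)  # processing: make it absolute
            if absolute not in self.links:  # processing: drop duplicates
                self.links.append(absolute)


# Made-up markup standing in for the HTML a server would return.
SAMPLE_HTML = """
<html><body>
  <a href="/laptops/page/1/">Page 1</a>
  <a href="/laptops/page/2/">Page 2</a>
  <a href="/laptops/page/1/">Back to page 1</a>
  <a>No link here</a>
</body></html>
"""

collector = LinkCollector("https://example.com/")
collector.feed(SAMPLE_HTML)  # HTML parsing happens as the text is fed in
print(collector.links)
```

The resulting list could then be stored or fed back into the request step to scrape each page in turn.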

The Must-Read Files Before You Dive In

Before starting, familiarize yourself with key files providing valuable information for responsible scraping. Understanding these files ensures ethical, legal, and compliant scraping activities.

1. Robots.txt:

The “robots.txt” file tells web scrapers which parts of the website they can access and which parts they should avoid.

2. Website Structure and HTML/CSS:

Get to know the website’s structure, HTML tags, and CSS classes. Understanding how it is organized helps you find the elements you want to scrape easily and efficiently.

3. Terms of Service (ToS):

The Terms of Service is a legal document that explains the rules for using the website. It may have rules about web scraping. Reading it helps you know if scraping is allowed and follow the website’s policies.

4. Privacy Policy:

A website’s Privacy Policy discloses how it handles user data and personal information. As a responsible scraper, it’s important to be aware of the website’s privacy practices and avoid collecting sensitive or private data without permission.

5. Terms of Attribution (if applicable):

Some websites require attribution when their data is reused. Review their requirements and credit the source accordingly.

6. API Documentation (if available):

Some websites have APIs that provide an organized way to access data. When an API is available, it is usually preferable to web scraping: the data arrives in a structured format, and this kind of access is typically sanctioned by the website.

Web Scraping in Action: Practical Use Cases

Let’s look at several web scraping applications to see why someone would want to collect such large amounts of data from websites:

1. Price Comparison:

Ethical web scraping allows businesses and consumers to compare prices across different websites, empowering them to make informed purchasing decisions and find the best deals.

2. Market Research:

Web scraping enables ethical data collection for market research, helping businesses analyze trends, customer preferences, and competitor strategies to refine their products and services.

3. Academic Research:

Researchers can use web scraping responsibly to gather data for academic studies, enabling insights into various fields like social sciences, economics, and public health.

4. Job Market Analysis:

Web scraping job listings ethically supports labor market analysis, offering valuable information to job seekers, employers, and policymakers.

5. Social Media Analytics:

Ethical web scraping of social media data allows businesses and researchers to analyze trends and sentiments for marketing and public opinion studies.

Web Scraping Tools

To get started with web scraping, you’ll need the right tools. Here are the two primary components you’ll need:

1. Python

Python is a powerful and beginner-friendly programming language, making it an ideal choice for web scraping projects. If you haven’t already installed Python, head to the official Python website and download the latest version. Once installed, we can proceed with setting up our web scraping environment.

2. Web Scraping Library such as Beautiful Soup

Beautiful Soup is a Python library that makes web scraping easier by parsing HTML and XML documents into a navigable tree structure.
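Beautiful Soup is installed as the `beautifulsoup4` package and imported as `bs4`. As a quick taste of the “navigable tree”, the sketch below parses a small made-up HTML snippet (not taken from any real website) and reads a few elements from it.

```python
from bs4 import BeautifulSoup

# Made-up markup used only to demonstrate navigation.
SAMPLE_HTML = """
<html>
  <head><title>Sample Shop</title></head>
  <body>
    <h1>Laptops</h1>
    <p class="intro">Browse our range.</p>
    <p>Prices include tax.</p>
  </body>
</html>
"""

soup = BeautifulSoup(SAMPLE_HTML, "html.parser")

page_title = soup.title.string  # text inside the <title> tag
heading = soup.find("h1").get_text(strip=True)  # first <h1> on the page
paragraphs = [p.get_text(strip=True) for p in soup.find_all("p")]  # every <p>
intro = soup.find("p", class_="intro").get_text(strip=True)  # filter by CSS class

print(page_title, heading, paragraphs, intro, sep="\n")
```

`find` returns the first matching element and `find_all` returns every match, which is the pattern the rest of this tutorial relies on.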

Web Scraping Tutorial – Scraping GadgetsTech.pk as an Example

In this section, I’ll describe in detail how to extract the discounted laptop prices listed on this website during a sale so that we can analyze them.

Prerequisites:

- Windows Operating System

- Web Browser: Google Chrome

- Version of Python: 3.x (Python 2 reached end of life in 2020)

- Python Libraries: BeautifulSoup and Pandas

Pandas is used in the web scraping code to organize, store, and manipulate the extracted data efficiently. It provides a DataFrame structure for tabular data, simplifies CSV file creation, allows easy data manipulation, and integrates well with other data analysis libraries.

Let’s start now!

Step 1: Check for the robots.txt file

To find a website’s robots.txt file, we can simply add “/robots.txt” to the end of the website’s root URL. Here, the website’s URL is “https://gadgetstech.pk”, so we can find the robots.txt file by visiting “https://gadgetstech.pk/robots.txt”.

This robots.txt file indicates that web crawlers are allowed to access most of the website’s content, except for the “/wp-admin/” pages.
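The same check can be done in code with Python’s built-in `urllib.robotparser` module. In the sketch below, the robots.txt text is an assumption written to match the description above; the live file may contain additional rules, so treat the result as illustrative.

```python
from urllib.robotparser import RobotFileParser

# Assumed robots.txt content, written to match the description above.
# The live file may contain additional rules.
ASSUMED_ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-admin/
"""

parser = RobotFileParser()
parser.parse(ASSUMED_ROBOTS_TXT.splitlines())

listing_allowed = parser.can_fetch(
    "*", "https://gadgetstech.pk/product-category/laptops/dell/"
)
admin_allowed = parser.can_fetch("*", "https://gadgetstech.pk/wp-admin/")

print("Laptop listing allowed:", listing_allowed)
print("Admin pages allowed:", admin_allowed)
```

To check the live file instead, call `parser.set_url("https://gadgetstech.pk/robots.txt")` followed by `parser.read()` in place of `parser.parse(...)`.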

Step 2: Locate the URL you wish to scrape.

To get the name, original price, and discounted price of each laptop for this example, we will scrape the web page at “https://gadgetstech.pk/product-category/laptops/dell/”.

Step 3: Inspecting the Web Page

The data on a web page is usually nested inside HTML tags. Therefore, we inspect the page to determine which tag contains the information we want to scrape. To do so, right-click the element and select “Inspect” from the context menu.

Step 4: Locate the data you need

When you select “Inspect”, the browser’s inspector panel will open. Find the data you wish to extract.

Let’s extract the Name and Price, each of which is contained within a “div” tag.

Step 5: Write the code

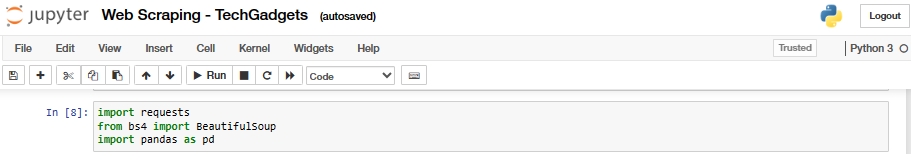

Let’s first create a Python file. I am using Jupyter Notebook for this.

First, I will import all the libraries:

Then, I send an HTTP request and get the webpage content. As mentioned earlier, the information we wish to retrieve is nested within <div> tags. To extract the data and save it in a variable, I will locate the div tags with the corresponding class names. Consult the code below:
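Here is a minimal sketch of what this step can look like. Treat it as an illustration under stated assumptions rather than the exact code for this website: the four class names are hypothetical placeholders that you should replace with the ones shown in your browser inspector, the sample markup is made up, and the request is sent with Python’s built-in `urllib.request` so that no extra HTTP library is needed.

```python
from urllib.request import Request, urlopen

from bs4 import BeautifulSoup

URL = "https://gadgetstech.pk/product-category/laptops/dell/"

# Hypothetical class names used only for this sketch. Replace them with the
# class names you actually see in the browser inspector.
CARD_CLASS = "product-card"
NAME_CLASS = "product-name"
ORIGINAL_PRICE_CLASS = "original-price"
DISCOUNTED_PRICE_CLASS = "discounted-price"


def fetch_html(url):
    """Send an HTTP GET request and return the page's HTML as text."""
    request = Request(url, headers={"User-Agent": "tutorial-scraper/0.1"})
    with urlopen(request, timeout=30) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")


def div_text(parent, class_name):
    """Return the stripped text of the first matching <div>, or None."""
    tag = parent.find("div", class_=class_name)
    return tag.get_text(strip=True) if tag else None


def parse_laptops(html):
    """Extract one dictionary per product card found in the HTML."""
    soup = BeautifulSoup(html, "html.parser")
    return [
        {
            "Name": div_text(card, NAME_CLASS),
            "Original Price": div_text(card, ORIGINAL_PRICE_CLASS),
            "Discounted Price": div_text(card, DISCOUNTED_PRICE_CLASS),
        }
        for card in soup.find_all("div", class_=CARD_CLASS)
    ]


# Made-up sample markup so the sketch runs without contacting the website.
SAMPLE_HTML = """
<div class="product-card">
  <div class="product-name">Example Laptop A</div>
  <div class="original-price">Rs. 100,000</div>
  <div class="discounted-price">Rs. 90,000</div>
</div>
<div class="product-card">
  <div class="product-name">Example Laptop B</div>
  <div class="original-price">Rs. 150,000</div>
  <div class="discounted-price">Rs. 120,000</div>
</div>
"""

laptops = parse_laptops(SAMPLE_HTML)
for laptop in laptops:
    print(laptop)
```

To run it against the live page, replace `SAMPLE_HTML` with `fetch_html(URL)`. If the list comes back empty or full of `None` values, the placeholder class names do not match the page and need adjusting.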

Step 6: Keeping the data in the required format

After the data has been extracted, you will usually want to save it. The right format depends on your needs. For this example, we will save the extracted data in CSV (Comma Separated Values) format. I’m going to add the following lines to my code to do this:
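A minimal sketch of this step with pandas might look like the following. The function name `save_laptops` and the two sample rows are placeholders invented for this example; in practice the rows would come from the extraction step.

```python
import io

import pandas as pd

COLUMNS = ["Name", "Original Price", "Discounted Price"]


def save_laptops(rows, target="Dell_laptops.csv"):
    """Write the rows to CSV (a file path or a text buffer) and return the DataFrame."""
    frame = pd.DataFrame(rows, columns=COLUMNS)
    frame.to_csv(target, index=False)  # index=False leaves out the row numbers
    return frame


# Placeholder rows standing in for the scraped data.
sample_rows = [
    {"Name": "Example Laptop A", "Original Price": 100000, "Discounted Price": 90000},
    {"Name": "Example Laptop B", "Original Price": 150000, "Discounted Price": 120000},
]

# Write to an in-memory buffer here so the demonstration leaves no file behind.
preview = io.StringIO()
save_laptops(sample_rows, preview)
print(preview.getvalue())
```

Calling `save_laptops(sample_rows)` without a second argument writes the file “Dell_laptops.csv” to the current working directory.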

I’ll now run the entire code again. The extracted data is saved in a file called “Dell_laptops.csv”, which is created in the same directory as the code file.

It contains the following extracted data:

This data can be helpful to compare the prices of the laptops.
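As one example of such a comparison, the sketch below works out the discount percentage for each laptop. The rows are placeholders rather than real scraped values, and the price format (“Rs.” followed by a whole number with thousands separators) is an assumption; adjust `parse_price` if the prices on the page look different.

```python
import re


def parse_price(text):
    """Turn a price string such as 'Rs. 100,000' into the integer 100000.

    Assumes whole-number prices: every non-digit character is discarded.
    """
    digits = re.sub(r"\D", "", text)
    if not digits:
        raise ValueError(f"no digits found in price: {text!r}")
    return int(digits)


def discount_percent(original, discounted):
    """Return the discount as a percentage of the original price."""
    return round((original - discounted) / original * 100, 1)


# Placeholder rows standing in for the contents of the CSV file.
rows = [
    {"Name": "Example Laptop A", "Original Price": "Rs. 100,000", "Discounted Price": "Rs. 90,000"},
    {"Name": "Example Laptop B", "Original Price": "Rs. 150,000", "Discounted Price": "Rs. 120,000"},
]

for row in rows:
    row["Discount %"] = discount_percent(
        parse_price(row["Original Price"]),
        parse_price(row["Discounted Price"]),
    )

best_deal = max(rows, key=lambda row: row["Discount %"])
print(best_deal["Name"], "has the largest discount:", best_deal["Discount %"], "%")
```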

Wrapping Up

You’ve finished your first web scraping tutorial with us using Python and Beautiful Soup. Web scraping opens up a world of possibilities for data extraction and analysis. Remember to use the information you gather responsibly and ethically, while adhering to the website’s terms and conditions.

If you want to use bots to make web scraping an even more spectacular experience, visit our website at www.scrapewithbots.com and let us know how we can help. Have fun scraping!

Frequently Asked Questions

1. Can I Use Web Scraping For Commercial Purposes?

Yes, but with caution. Before using scraped data for commercial purposes, make sure you have explicit permission or that the website’s terms of service allow it.

2. What Are Some Alternatives To Web Scraping?

If a website provides an API (Application Programming Interface), it’s often better to use it for data access as it is more structured and approved by the website. Furthermore, several websites provide data downloads in a variety of forms, which can be a good alternative to scraping.