How to Scrape Dynamic Web Page

To understand how to scrape a dynamic web page, you first need to know what one is. Dynamic web pages (also known as database-driven sites) are, as the name suggests, capable of changing. They are designed to display different content depending on how you interact with the page and on factors such as the viewer, the time zone, and the language of the region. In the early days of the internet, websites were static: they always showed the same content to everyone.

As the internet evolved, web pages shifted from static to dynamic. Web scraping, in turn, helped many businesses run more efficiently by supporting data-driven decisions, and low-code and no-code scraping tools later made the process more convenient because no programming was required.

The complexity of dynamic web pages can be a hurdle when you build your own web scraping solution. In this article, we explain how to scrape a dynamic web page, why doing so is challenging, and which approaches you can use to collect information from such websites.

Scrape Dynamic Web Page

Today, most websites we encounter are dynamic. A good example is searching for a product or service on a website: when a single click brings up more options, different images, or ads that take into account who you are, you are looking at dynamic content.

How to Scrape Dynamic Web Page?

Dynamic websites use server-side and client-side scripting to generate content for each visit, so different visitors can see different content.

Scraping a dynamic web page is difficult because the content can change based on what the user wants to see. The page’s source code, as delivered by the server, often does not yet contain the information you would like to scrape; the browser adds it afterwards by running JavaScript. To get the data, you therefore have to render the page the way a browser would before extracting anything.

Quickie: Basic web page scraping involves sending an HTTP request to a website’s server, receiving the HTML response, and parsing that HTML to extract the data, often using a library like Beautiful Soup. Dynamic pages add a rendering step on top of this.

Scraping dynamic web pages involves more steps than scraping static ones. A dynamic page loads its HTML first, and JavaScript then populates that HTML with data. This creates difficulties because a scraper typically sends a request to the server and works with the HTML the server returns, which does not yet include that data.
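To make the problem concrete, here is a minimal sketch of what a scraper sees when it only reads the server’s response. The HTML string, the element id, and the script path are made up for illustration; a real page will look different.

```python
from bs4 import BeautifulSoup

# Hypothetical HTML as a server might return it for a dynamic page:
# the container is empty and a script fills it in later, in the browser.
RAW_HTML = """
<html>
  <body>
    <div id="quotes"></div>
    <script src="/static/load_quotes.js"></script>
  </body>
</html>
"""

soup = BeautifulSoup(RAW_HTML, "html.parser")
container = soup.find("div", id="quotes")

# No JavaScript has run, so the container holds no quote elements yet.
quote_elements = container.find_all(class_="quote")
print(len(quote_elements))
```

The parser finds the container but nothing inside it, which is why a plain request-and-parse scraper comes back empty-handed on pages like this.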

There are different approaches to scraping a dynamic webpage:

- BeautifulSoup

- Selenium Python Library

- Import necessary parts of Selenium

- Scrape the content directly from the JavaScript

I’ll explain these approaches one by one in this article. You can contact us if you have difficulty understanding them or want further information.
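As a brief illustration of the last approach in the list, some pages ship their data inside an inline script, and you can read it from there without rendering anything. The sketch below assumes a made-up page where the data is assigned to a JavaScript variable named data as a JSON array; the function name extract_embedded_json is hypothetical, and real pages often need a different pattern.

```python
import json
import re

# Hypothetical page source: the quotes live in a JavaScript variable
# rather than in the HTML elements themselves.
PAGE_SOURCE = """
<html><body>
<div id="quotes"></div>
<script>
    var data = [
        {"text": "First example quote.", "author": "Author A", "tags": ["life"]},
        {"text": "Second example quote.", "author": "Author B", "tags": []}
    ];
    render(data);
</script>
</body></html>
"""


def extract_embedded_json(page_source: str, variable: str = "data"):
    """Return the JSON array assigned to `variable` in an inline script."""
    # Capture everything between "var <name> =" and the closing "];".
    pattern = rf"var\s+{re.escape(variable)}\s*=\s*(\[.*?\]);"
    match = re.search(pattern, page_source, re.DOTALL)
    if match is None:
        return None
    return json.loads(match.group(1))


quotes = extract_embedded_json(PAGE_SOURCE)
print(quotes)
```

This only works when the embedded value is valid JSON; JavaScript object literals with unquoted keys or trailing commas would need extra handling.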

1- Scrape Dynamic Web Page with Beautiful Soup

Beautiful Soup is a popular Python module that parses a downloaded web page into a specific format and provides a convenient interface to navigate content.

The official Beautiful Soup documentation is available on the project’s website. The latest version of the module can be installed with this command: pip install beautifulsoup4.

Beautiful Soup is an excellent tool for extracting data from web pages, but it only works with the page’s source code. Dynamic sites first need to be rendered as they would be displayed in a browser, and that is where Selenium comes in.

This process usually involves two steps:

I- Fetching the page

We first have to download the page as a whole. This step is like opening the page in your web browser when scraping manually.

II- Parsing the data

Next, we extract the data we need from the page’s HTML and convert it to a machine-readable format like JSON or XML.

Beautiful Soup is a Python library for pulling data out of HTML and XML files. It builds a parse tree from the page source, which you can use to extract data in a hierarchical and more readable manner.

The package is imported under the name bs4, so you need an import statement for it at the beginning of your Python code (for example, from bs4 import BeautifulSoup).
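The two steps above can be sketched roughly as follows. This is an illustration rather than a reference implementation: the function names fetch_page and parse_quotes are hypothetical, the sample HTML is made up, and the class names (quote, text, author, tag) are borrowed from the Selenium walkthrough later in this article. The standard library’s urllib is used for fetching here; any HTTP client would do.

```python
import json
from urllib.request import Request, urlopen

from bs4 import BeautifulSoup


def fetch_page(url: str) -> str:
    """Step I: download the page source as text."""
    request = Request(url, headers={"User-Agent": "Mozilla/5.0"})
    with urlopen(request, timeout=10) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset)


def parse_quotes(html: str) -> list:
    """Step II: turn the HTML into a list of dictionaries."""
    soup = BeautifulSoup(html, "html.parser")
    quotes = []
    for entry in soup.find_all(class_="quote"):
        # Assumes every entry contains a "text" and an "author" element.
        quotes.append({
            "text": entry.find(class_="text").get_text(strip=True),
            "author": entry.find(class_="author").get_text(strip=True),
            "tags": [t.get_text(strip=True) for t in entry.find_all(class_="tag")],
        })
    return quotes


# Made-up HTML standing in for a downloaded page.
SAMPLE_HTML = """
<div class="quote">
  <span class="text">An example quote.</span>
  <small class="author">Example Author</small>
  <a class="tag">example</a> <a class="tag">demo</a>
</div>
"""

quotes = parse_quotes(SAMPLE_HTML)
print(json.dumps(quotes, indent=2))
```

For a live page you would call parse_quotes(fetch_page(url)). On a dynamic site this returns an empty list whenever the quote elements are added by JavaScript, which is the limitation the next section addresses.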

2- Scraping Dynamic Web Pages with Python Selenium

Selenium is a free, open-source automated testing framework used to validate web applications across different browsers and platforms. Here, we use Python as our primary language.

Pros: Selenium with Python can execute JavaScript on the page and simulate user interactions with it.

Selenium is one of the first major automation tools created for website testing. It supports two browser control protocols: the older WebDriver protocol and, as of Selenium 4, the Chrome DevTools Protocol (CDP).

Languages

- Java, Python, C#, Ruby, JavaScript, Perl, PHP, R, Objective-C, and Haskell

Browsers

- Chrome, Firefox, Safari, Edge, Internet Explorer (and their derivatives)

A step-by-step guide to scraping a dynamic web page with Python and Selenium:

I- Viewing the page source

The first step is to visit our target website and inspect the HTML.

II- Choosing elements

Now that you have the HTML open in front of you, you’ll need to find the correct elements. Since the website we chose contains quotes from famous authors, we are going to scrape the elements with the following classes:

- ‘quote’ (the whole quote entry); then, for each quote, we’ll select these classes:

- ‘text’

- ‘author’

- ‘tag’

III- Adding the By class and pprint

We already have Selenium set up, but we also need to import By from the Selenium Python library to simplify selection. By defines the locator strategies (class name, ID, CSS selector, XPath, and so on) that you pass to Selenium when searching for elements, which also lets you check whether certain elements are present.

IV- Choosing a browser and specifying the target

You can leave the driver setup as it is in the example; just make sure to choose the browser you’ll be using for scraping. As for the target (https://scrapewithbots.com/), type it in exactly as shown.

Let’s also set up a variable for how many pages we want to scrape; let’s say 3. The scraper output will be a JSON-like list, quotes_list, containing information about the quotes we scrape.

V- Choosing a selector

Selenium offers various selectors, but for this guide we use the class name. Going back to the Selenium Python library, we locate our elements with the class selector (By.CLASS_NAME).

With the selector chosen, we first have to open the target website in Selenium. A single call to the driver’s get method does just that. Then it’s time to get the information that we need.

VI- Scraping

It’s finally time to start scraping. Don’t worry: there is quite a bit of code to tackle, but we give examples and explain each step of the process at https://scrapewithbots.com/.

VII- Formatting the results

We’re nearing the finish line. All that’s left is to add the last line of code, which prints the results of your scraping request to the console window after the pages have been scraped:

- pprint(quotes_list)

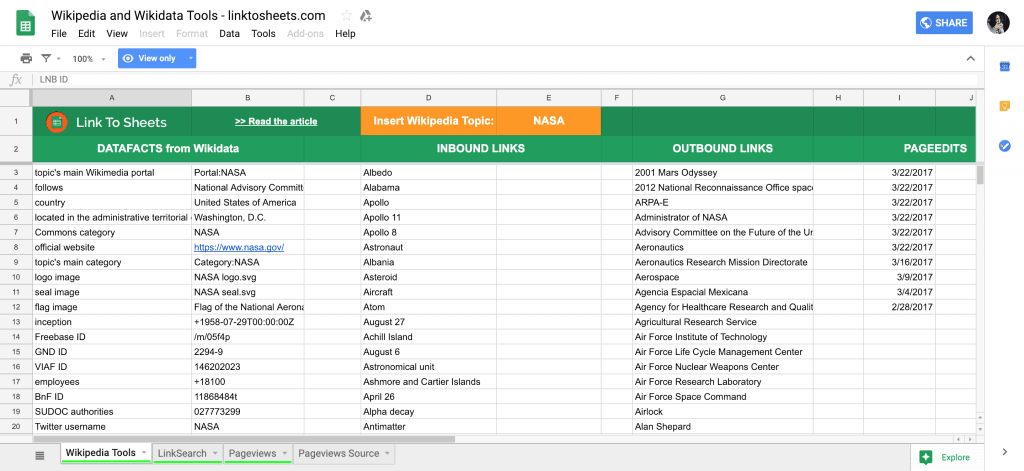

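3- Scrape Dynamic Web Page with Google Sheets

Can you scrape a dynamic web page in Google Sheets? To some extent, yes. Google Sheets can be regarded as a basic web scraper: you can use a built-in formula to extract data from websites, import it directly into a sheet, and share it with your friends. Keep in mind that the formula reads the HTML the server returns and does not run JavaScript, so it only picks up data that is already present in that HTML.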

Here’s how.

I- Web scraper using ImportXML in Google Spreadsheets

- Open a new Google sheet.

- Open the target website in Chrome. In this case, we choose Games sales. Right-click on the web page to bring up the context menu and select “Inspect”. Then press “Ctrl” + “Shift” + “C” to activate the element selector, which lets the inspection panel show information about whichever element you select on the page.

- Copy and paste the website URL into the sheet.

II- With a simple formula ImportXML

- Copy the XPath of the element. Select the price element and right-click it to bring up the context menu. Then select “Copy” and choose “Copy XPath”.

- Type the formula into the spreadsheet: =IMPORTXML("URL", "XPath expression")

Note that the “XPath expression” is the one we just copied from Chrome. Replace any double quotation marks within the XPath expression with single quotation marks.
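If you build these formulas often, the quotation-mark rule is easy to automate. The small Python helper below is only a sketch: the function name build_importxml_formula, the URL, and the XPath are all made up for illustration.

```python
def build_importxml_formula(url: str, xpath: str) -> str:
    """Return an =IMPORTXML(...) formula string for Google Sheets."""
    # Double quotes inside the XPath would end the formula's string
    # argument early, so swap them for single quotes.
    safe_xpath = xpath.replace('"', "'")
    return f'=IMPORTXML("{url}", "{safe_xpath}")'


formula = build_importxml_formula(
    "https://example.com/games",   # hypothetical URL
    '//*[@id="price"]/span[1]',    # hypothetical XPath copied from Chrome
)
print(formula)
# prints: =IMPORTXML("https://example.com/games", "//*[@id='price']/span[1]")
```

Paste the printed formula into a cell, and Google Sheets will fetch the matching element from the page.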

Why Scrape Web Pages?

Dynamic web pages, like static websites, contain a lot of data that can help you better understand your industry. Many dynamic websites contain more data than static ones, since they typically have more images and social media integrations.

Here’s a list of some of the essential data you can get from dynamic web pages:

- What your competitors are doing in your industry

- Reviews of your competitors’ products

FAQs: How to Scrape Dynamic Web Page?

How can I get data from a dynamic website?

You can get data from a dynamic website using scraping tools such as Beautiful Soup, Selenium with Python, and Google Sheets.

Wrap Up

In a nutshell, go with Beautiful Soup if you want to speed up development or familiarize yourself with Python and web scraping. With Scrapy, you can implement demanding web scraping applications in Python, provided you have the appropriate know-how. Use Selenium or Google Sheets if your primary goal is to scrape dynamic content.

Thanks for reading. If you wish to learn more about web scraping, don’t hesitate to get in touch with us at ScrapeWithBots.